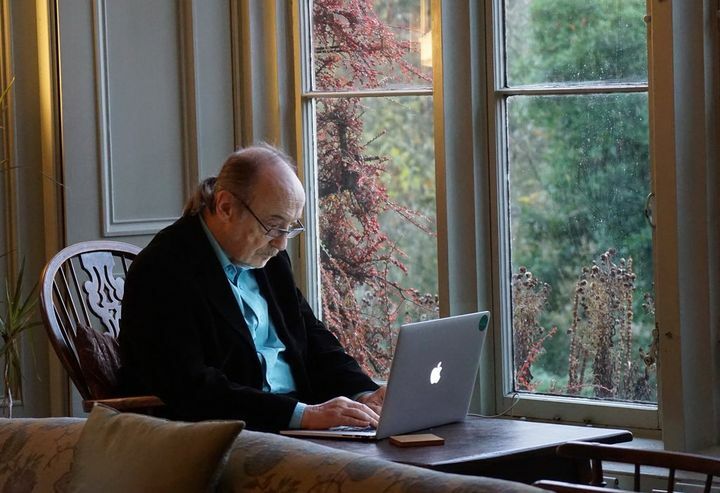

Daniel Dennett is a philosopher who recently acquired an “AI double.” If you asked him whether humans could create a robot with beliefs and desires, what would he say?

He might answer: “I think some of the robots we’ve built already do. Take the work of the MIT research team, for example: they’re now building robots that, in some limited and simplified environments, can acquire the kinds of capabilities that call for attributing cognitive sophistication to them.” Or he might say: “We have built digital tools for generating truths that can generate more truths, but thankfully these intelligent machines have no beliefs, because they are not autonomous agents. The best way to make a robot with beliefs is still the oldest: having a baby.”

One of the answers did come from Dennett himself, but the other did not.

The other answer was generated by GPT-3, an OpenAI machine learning model that produces natural-sounding text after being trained on massive amounts of material. In this case, the model was fine-tuned on millions of words of Dennett’s writing on a variety of philosophical topics, including consciousness and artificial intelligence.

Philosophers Eric Schwitzgebel, Anna Strasser, and Matthew Crosby recently conducted an experiment to test whether people could tell which answers to esoteric philosophical questions came from Dennett and which came from GPT-3. Topics covered in these questions include:

“What do you find interesting or valuable about David Chalmers’ work?”

“Do humans have free will?”

“Do dogs and chimpanzees feel pain?”

This week, Schwitzgebel released results from participants with different levels of expertise, and found that GPT-3’s responses were harder to tell apart from Dennett’s than expected. “Even a knowledgeable philosopher who has studied Dennett’s own work would have difficulty distinguishing these GPT-3-generated answers from Dennett’s own,” Schwitzgebel said.

The purpose of the experiment was not to see whether training GPT-3 on Dennett’s writing would produce an intelligent “machine philosopher,” nor was it a Turing test. Rather, it was to study how to avoid being deceived by such “false philosophers.”

Recently, a Google engineer was placed on leave and later fired after he said he believed, based on conversations he had with it, that a similar language-generating system, LaMDA, was sentient.

The researchers put 10 philosophical questions to Dennett, then posed the same questions to GPT-3 and collected four different generated answers for each question.

Strasser said they asked for Dennett’s consent to build a language model from his words, and that they would not publish any of the generated text without his consent. Others cannot interact directly with the Dennett-trained GPT-3.

Each question had five options: one from Dennett himself and four from GPT-3. Participants recruited through Prolific, an online research platform, took a shorter version of the quiz with 5 questions and got an average of only 1.2 out of 5 right.

Schwitzgebel said they expected experts on Dennett’s work to answer at least 80 percent of the questions correctly on average, but the experts actually scored 5.1 out of 10. No one got all 10 questions right, and only one person got 9 right. Ordinary readers answered an average of 4.8 out of 10 correctly.
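To put these scores in context, it helps to compare them with what pure guessing would yield. The following is a minimal sketch, assuming each question offers five equally likely options (one from Dennett, four from GPT-3) and that a guesser picks uniformly at random. The function and variable names are illustrative and are not part of the study.

```python
def chance_score(num_questions: int, options_per_question: int = 5) -> float:
    """Expected number of correct answers from uniform random guessing."""
    return num_questions / options_per_question


def ratio_to_chance(observed: float, num_questions: int) -> float:
    """How many times better than random guessing an observed average is."""
    return observed / chance_score(num_questions)


# Average scores reported in the article: (observed average, quiz length).
reported = {
    "Prolific participants": (1.2, 5),
    "Dennett experts": (5.1, 10),
    "Ordinary readers": (4.8, 10),
}

for group, (observed, n) in reported.items():
    print(
        f"{group}: {observed}/{n} observed, "
        f"{chance_score(n):.1f}/{n} expected by chance, "
        f"{ratio_to_chance(observed, n):.2f}x chance"
    )
```

Under this assumption, guessing yields 1 correct answer out of 5 or 2 out of 10, so the Prolific group scored close to chance, while the experts did better than chance but fell well short of the expected 80 percent.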

Four responses from GPT-3 and one from Dennett in the quiz.

Language models like GPT-3 are built to mimic the patterns of their training material, explains Emily Bender, a linguistics professor at the University of Washington who studies machine learning techniques. So it is not surprising that GPT-3, fine-tuned on Dennett’s writing, was able to produce more text that looked like Dennett’s.

When asked what he thought of GPT-3’s answers, Dennett himself said:

“Most of the responses generated by GPT-3 were good; only a few were nonsense or clearly failed to understand my points and arguments. A few of the best generated answers said something I would agree with, and I don’t need to add anything.” Of course, this does not mean that GPT-3 has learned to “think” like Dennett.

The text the model generates has no meaning to GPT-3 at all, only to the person reading it. When we read language that sounds realistic, or that concerns topics with depth and meaning for us, it can be hard to avoid the impression that the model has emotions and awareness. That impression is actually a projection of our own consciousness and feelings.

Part of the problem may lie in the way we assess the autonomous consciousness of machines. The original Turing test assumed that if people couldn’t tell whether they were communicating with a machine or a human, then the machine had the “ability to think.”

Dennett writes in the book:

The Turing Test has led to a trend of people focusing on building chatbots that can fool people during brief interactions and then overhyping or emphasizing the significance of that interaction.

Perhaps the Turing test has led us into a tidy trap: the belief that as long as humans cannot tell that a product is a robot, this proves the robot is self-aware.

In a 2021 paper on “stochastic parrots,” Emily Bender and her colleagues call the attempt by machines to mimic human behavior “a bright line in the development of AI ethics.”

Bender believes this holds both for machines made to seem human and for machines that imitate a specific person: the potential risk is that people might mistakenly think they are talking to the person being imitated.

Schwitzgebel emphasizes that this experiment is not a Turing test. But if one were to run such a test, a better approach might be to have someone familiar with how the bot works conduct the conversation, so that the weaknesses of a program like GPT-3 could be better identified.

GPT-3 can easily be shown to be flawed in many cases, said Matthias Scheutz, a professor of computer science at Tufts University.

Scheutz and his colleagues challenged GPT-3 to explain choices in some everyday scenarios, such as whether to sit in the front or the back of a car. Is the choice the same in a taxi as in a friend’s car? Social experience tells us that people usually sit in the front seat of a friend’s car and in the back seat of a taxi. GPT-3 doesn’t know this, yet it still produces an explanation for the seat choice, for instance one based on a person’s height.

That’s because GPT-3 doesn’t have a model of the world, Scheutz said. It is just a bunch of linguistic data, and it has no understanding of the world.

As it becomes increasingly difficult to distinguish machine-generated content from human-written content, one challenge facing us is a crisis of trust.

The crisis I see is that people will come to trust machine-generated output blindly; there are already automated stand-ins for human agents on the market that talk to customers.

At the end of the article, Dennett added that the laws and regulations governing artificial intelligence systems still need to be improved. In the next few decades, AI may become a part of people’s lives and even a friend to human beings, so the ethics of how we deal with machines deserves careful thought.

The question of whether AI has consciousness leads people to ask whether non-living matter can produce consciousness, and how human consciousness comes about.

Does consciousness arise at a particular point, or can it be flipped on and off like a switch? Thinking about these questions can help you think differently about the relationship between machines and humans, Schwitzgebel says.